Augmented reality

Augmented reality (AR) is a modern information technology that can turn a real image into a virtual one on a computer screen. For example, the movement of a human hand in front of a small camera can become the movement of a mythical animal on the computer screen.

Sony released a game for the PlayStation 3 console in 2007 that relies on this technology, and many projects are currently under way to use augmented reality in what is known as digital art.

When the iPhone was first marketed in 2007, John Doerr, a partner at the large investment fund Kleiner Perkins, took the stage and said: 'Think about it. In your pocket you have a device that is connected to the Internet at every moment, a personal device that knows who you are and where you are. This is hugely important, more important than the personal computer.' Doerr's words have indeed come true: over the past two years, the iPhone has introduced a new world of experiences connected to one another through a variety of programs and applications.

Because augmented reality uses positioning to display information, it must know not only the location of the device but also the direction in which the camera is pointed, and for this it needs a compass built into the device. The new iPhone 3GS has such a built-in compass. Apple has reportedly released ARkit, a package of tools built on open-source software for developing AR applications, and has informed developers that AR-based software will not be available in Apple's store before September 2010. Apple is also the second company to open up augmented reality capabilities on its phones, after Google's mobile operating system (Android).

Augmented reality and ambient intelligence (AMI)

Ambient intelligence describes how electronic devices react to changes, movements, and the results of processing dynamic information, such as identifying cars with cameras on a highway, or switching lamps on or off based on data supplied by sensors. Integrating the data provided by devices that support augmented reality technology,

Definition

There are two commonly accepted definitions of Augmented Reality today. One was given by Ronald Azuma in 1997 [1]. Azuma's definition says that Augmented Reality:

- combines real and virtual

- is interactive in real time

- is registered in 3D

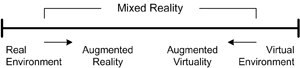

Additionally, Paul Milgram and Fumio Kishino defined Milgram's Reality-Virtuality Continuum in 1994 [2]. They describe a continuum that spans from the real environment to a purely virtual environment. In between lie Augmented Reality (closer to the real environment) and Augmented Virtuality (closer to the virtual environment).

More recently, the term augmented reality has come to be used more loosely as the general public's interest in AR has grown.

History

- 1957-62: Morton Heilig, a cinematographer, creates and patents a simulator called Sensorama with visuals, sound, vibration, and smell.[3]

- 1966: Ivan Sutherland invents the head-mounted display, describing it as a window into a virtual world.

- 1975: Myron Krueger creates Videoplace that allows users to interact with virtual objects for the first time.

- 1989: Jaron Lanier coins the phrase Virtual Reality and creates the first commercial business around virtual worlds.

- 1992: Tom Caudell coins the phrase Augmented Reality while at Boeing helping workers assemble cables into aircraft.

- 1992: L.B. Rosenberg develops one of the first functioning AR systems, called VIRTUAL FIXTURES, at the U.S. Air Force Armstrong Labs, and demonstrates a benefit to human performance.[4][5]

- 1992: Steven Feiner, Blair MacIntyre and Doree Seligmann present the first major paper on an AR system prototype, KARMA, at the Graphics Interface conference. A widely cited version of the paper is published in Communications of the ACM the following year.

- 1999: Hirokazu Kato (加藤 博一) develops ARToolKit at the HITLab and it is demonstrated at SIGGRAPH that year.

- 2000: Bruce H. Thomas develops ARQuake, the first outdoor mobile AR game, which is demonstrated at the International Symposium on Wearable Computers.

- 2008: Wikitude AR Travel Guide launches on Oct. 20, 2008 with the G1 Android phone.

- 2009: AR Toolkit is ported to Adobe Flash (FLARToolkit) by Saqoosha, bringing augmented reality to the web browser.

Technology

Hardware

The main hardware components for augmented reality are a display, tracking, input devices, and a computer. Modern smartphones often combine a powerful CPU, a camera, accelerometers, GPS, and a solid-state compass, which makes them promising platforms for augmented reality.

Displays

There are three major display techniques for Augmented Reality:

I. Head Mounted Displays

II. Handheld Displays

III. Spatial Displays.

Head Mounted Displays

A Head Mounted Display (HMD) places images of both the physical world and registered virtual graphical objects over the user's view of the world. HMDs are either optical see-through or video see-through in nature. An optical see-through display employs half-silvered mirror technology to allow views of the physical world to pass through the lens and graphical overlay information to be reflected into the user's eyes. The HMD must be tracked with a six-degree-of-freedom sensor. This tracking allows the computing system to register the virtual information to the physical world. The main advantage of HMD AR is the immersive experience for the user. The graphical information is slaved to the view of the user. The most common products employed are the MicroVision Nomad, Sony Glasstron, and I/O Displays.

Handheld Displays

Handheld Augmented Reality employs a small computing device with a display that fits in a user's hand. All handheld AR solutions to date have employed video see-through techniques to overlay the graphical information onto the physical world. Initially, handheld AR employed sensors such as digital compasses and GPS units for six-degree-of-freedom tracking. This was followed by the use of fiducial marker systems such as ARToolKit for tracking. Today, vision systems such as SLAM or PTAM are being employed for tracking. Handheld display AR promises to be the first commercial success for AR technologies. The two main advantages of handheld AR are the portable nature of handheld devices and the ubiquitous nature of camera phones.

Spatial Displays

Instead of the user wearing or carrying the display, as with head mounted displays or handheld devices, Spatial Augmented Reality (SAR) makes use of digital projectors to display graphical information onto physical objects. The key difference in SAR is that the display is separated from the users of the system. Because the displays are not associated with each user, SAR scales naturally up to groups of users, thus allowing for collocated collaboration between users. SAR has several advantages over traditional head mounted displays and handheld devices. The user is not required to carry equipment or wear the display over their eyes. This makes spatial AR a good candidate for collaborative work, as the users can see each other's faces. A system can be used by multiple people at the same time without each having to wear a head mounted display. Spatial AR does not suffer from the limited display resolution of current head mounted displays and portable devices. A projector-based display system can simply incorporate more projectors to expand the display area. Where portable devices have a small window into the world for drawing, a SAR system can display on any number of surfaces of an indoor setting at once. The tangible nature of SAR makes it an ideal technology to support design, as SAR supports both graphical visualisation and passive haptic sensation for the end users. People are able to touch physical objects, and it is this process that provides the passive haptic sensation.[1][6][7][8]

Tracking

Modern mobile augmented reality systems use one or more of the following tracking technologies: digital cameras and/or other optical sensors, accelerometers, GPS, gyroscopes, solid-state compasses, RFID, and wireless sensors. Each of these technologies has a different level of accuracy and precision. Most important is the tracking of the pose and position of the user's head for the augmentation of the user's view. The user's hand(s) or a handheld input device can also be tracked to provide a 6DOF interaction technique. Stationary systems can employ 6DOF tracking systems such as Polhemus, Vicon, or Ascension.
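As a rough illustration of how GPS and compass readings can be combined, the sketch below computes the bearing from the device to a point of interest and maps it to a horizontal screen position. The function names, the 60-degree field of view, the 480-pixel width, and the linear angle-to-pixel mapping are all assumptions made for this example, not part of any particular AR system.

```python
import math

def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2,
    in degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0

def screen_x(device_lat, device_lon, heading_deg, poi_lat, poi_lon,
             fov_deg=60.0, width_px=480):
    """Horizontal pixel at which to draw a label for the point of interest,
    or None if it lies outside the camera's horizontal field of view.

    heading_deg is the compass heading of the camera (hypothetical input).
    """
    target = bearing_deg(device_lat, device_lon, poi_lat, poi_lon)
    # Signed angle between camera direction and target, wrapped to [-180, 180).
    offset = (target - heading_deg + 180.0) % 360.0 - 180.0
    if abs(offset) > fov_deg / 2:
        return None
    # Simplification: map the angle linearly onto the screen width.
    return (offset / fov_deg + 0.5) * width_px
```

A point of interest due east of the device lands in the middle of the screen when the camera faces east, and is not drawn at all when the camera faces west.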

Input Devices

The choice of input device is still an open research question. Some systems, such as the Tinmith system, employ pinch glove techniques. Another common technique is a wand with a button on it. In the case of a smartphone, the phone itself can be used as a 3D pointing device, with the 3D position of the phone recovered from the camera images.

Computer

Camera-based systems require a powerful CPU and a considerable amount of RAM to process camera images. Wearable computing systems employ a laptop in a backpack configuration. Stationary systems typically use a traditional workstation with a powerful graphics card. Sound processing hardware can also be included in augmented reality systems.

Software

To merge real-world camera images and virtual 3D images consistently, virtual images must be attached to real-world locations in a visually realistic way. This means that a real-world coordinate system, independent of the camera, must be recovered from the camera images. This process is called image registration and is part of Azuma's definition of Augmented Reality.
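Once a world coordinate system and the camera pose are known, attaching a virtual object to a real-world location amounts to projecting its world coordinates into the image. The following is a minimal sketch using a pinhole camera model; the function name and the intrinsic parameters (`fx`, `fy`, `cx`, `cy`) are illustrative assumptions, and lens distortion is ignored.

```python
def project(point_w, R, t, fx, fy, cx, cy):
    """Project a 3D world point into pixel coordinates.

    R (3x3 nested lists) and t (length 3) map world coordinates into the
    camera frame: X_c = R * X_w + t. Returns None if the point is behind
    the camera.
    """
    xc, yc, zc = (
        sum(R[i][j] * point_w[j] for j in range(3)) + t[i]
        for i in range(3)
    )
    if zc <= 0:
        return None
    # Perspective division followed by the intrinsic scaling and offset.
    return (fx * xc / zc + cx, fy * yc / zc + cy)

# Example values (assumed): a 640x480 camera with a 500-pixel focal length.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
anchor = (1.0, 0.0, 2.0)  # virtual object fixed in the world
before = project(anchor, IDENTITY, (0.0, 0.0, 0.0), 500.0, 500.0, 320.0, 240.0)
after = project(anchor, IDENTITY, (-1.0, 0.0, 0.0), 500.0, 500.0, 320.0, 240.0)
```

The anchor stays fixed in world coordinates while its pixel position changes as the camera moves, which is what keeps the overlay visually attached to the scene.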

Augmented reality image registration uses a variety of computer vision methods, mostly related to video tracking. Many of these methods are inherited from similar visual odometry methods.

These methods usually consist of two stages. In the first stage, interest points, fiducial markers, or optical flow are detected in the camera images. This stage can use feature detection methods such as corner detection, blob detection, edge detection, or thresholding, and/or other image processing methods.
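The first stage can be illustrated with a deliberately simple detector: threshold a grayscale image, then group the bright pixels into connected blobs and report their centroids. This is a toy sketch on a nested-list image, with the function name and output format chosen for the example; real systems would use an optimized vision library.

```python
from collections import deque

def find_blobs(image, threshold):
    """Threshold a grayscale image (list of rows) and return one dict per
    4-connected bright region, with its centroid (row, col) and area."""
    height, width = len(image), len(image[0])
    mask = [[px > threshold for px in row] for row in image]
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for r in range(height):
        for c in range(width):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill collects every pixel of this blob.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            n = len(pixels)
            centroid = (sum(p[0] for p in pixels) / n,
                        sum(p[1] for p in pixels) / n)
            blobs.append({"centroid": centroid, "area": n})
    return blobs
```

The blob centroids play the role of detected interest points that the second stage can then relate to a world coordinate system.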

In the second stage, a real-world coordinate system is recovered from the data obtained in the first stage. Some methods assume that objects with known 3D geometry (or fiducial markers) are present in the scene and make use of that data. In some of those cases, the 3D structure of the whole scene must be calculated beforehand. If not all of the scene is known beforehand, SLAM can be used to map the relative positions of fiducial markers or 3D models. If no assumption is made about the 3D geometry of the scene, structure from motion methods are used. Methods used in the second stage include projective (epipolar) geometry, bundle adjustment, rotation representation with the exponential map, and Kalman and particle filters.
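Of the methods listed, the Kalman filter is the easiest to show in a few lines. The sketch below is a scalar filter with a constant-position model, which could smooth one noisy tracked coordinate over time; the function name, the default initial state, and the noise variances are assumptions for illustration, and a practical tracker would filter a full pose vector instead.

```python
def kalman_1d(measurements, process_var, measurement_var, x0=0.0, p0=1.0):
    """Filter a sequence of noisy scalar measurements.

    x is the state estimate and p its variance. The motion model assumes
    the tracked value stays constant between frames, up to process noise.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state is unchanged, but uncertainty grows.
        p = p + process_var
        # Update: blend prediction and measurement using the Kalman gain.
        k = p / (p + measurement_var)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates
```

A larger `measurement_var` makes the filter trust the sensor less and smooth more heavily, at the cost of reacting more slowly to real motion.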

See also

- Alternate reality game

- Augmented browsing

- Augmented virtuality

- Camera resectioning

- Computer-mediated reality

- Cyborg

- Head Mounted Display

- Mixed reality

- Simulated Reality

- Virtual retinal display

- Virtuality Continuum

- Virtual reality

- Video Glasses

- Wearable computer

References

- ^ a b R. Azuma, "A Survey of Augmented Reality", Presence: Teleoperators and Virtual Environments, pp. 355–385, August 1997.

- ^ P. Milgram and F. Kishino, "A Taxonomy of Mixed Reality Visual Displays", IEICE Transactions on Information and Systems, E77-D(12), pp. 1321-1329, 1994.

- ^ http://www.google.com/patents?q=3050870

- ^ L. B. Rosenberg. The Use of Virtual Fixtures As Perceptual Overlays to Enhance Operator Performance in Remote Environments. Technical Report AL-TR-0089, USAF Armstrong Laboratory, Wright-Patterson AFB OH, 1992.

- ^ L. B. Rosenberg, "The Use of Virtual Fixtures to Enhance Operator Performance in Telepresence Environments" SPIE Telemanipulator Technology, 1993.

- ^ Ramesh Raskar, Spatially Augmented Reality, First International Workshop on Augmented Reality, Sept 1998

- ^ David Drascic of the University of Toronto is a developer of ARGOS: A Display System for Augmenting Reality. David also has a number of AR related papers on line, accessible from his home page.

- ^ Augmented reality brings maps to life July 19, 2005

External links

Research groups

- Interactive Multimedia Lab, National University of Singapore, Singapore

- Augmented Environments Lab, GVU Center, Georgia Institute of Technology

- Wearable Computer Lab, South Australia

- HITLab, Seattle

- HITLab NZ, Christchurch New Zealand

- TU Munich

- Studierstube, Graz University of Technology, Austria

- Columbia University Computer Graphics and User Interfaces Lab led by Prof. Steven Feiner

- Projet Lagadic IRISA-INRIA Rennes

- Magic Vision Lab

Other